Born to do Math 162 - Golden Age to Golden Age

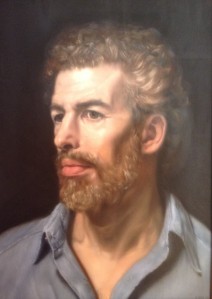

Scott Douglas Jacobsen & Rick Rosner

March 22, 2020

Rosner: So, we were talking and wondering if there is an upper limit to IQ and, more importantly, if there is an upper limit to intelligence.

Scott Douglas Jacobsen: Yes. Is it a functional question?

Rosner: All of these issues come up. Calling the '40s and '50s the Golden Age of science fiction is a misnomer now. We obviously live in science fiction now, so by a more reasonable definition, this is the Golden Age of science fiction.

But in the old-school Golden Age of science fiction, stories were written at a time when people in the '50s weren't yet skeptical of IQ. You had novels like Brain Wave, where everybody on Earth suddenly has their IQ multiplied by five.

There's some other story where a baby accidentally comes into contact with a ray gun from the future and has his IQ cubed. IQ was an easy shorthand for people becoming fantastically intelligent. The way IQ was used in the '50s, without skepticism, let people think about intelligence without thinking much.

The idea was that you could just turn IQ up like the volume knob in Spinal Tap. You could just keep going. If you look around online, you can find all sorts of questionable claims about the maximum possible intelligence.

You see claims of an intelligence that would span the entire universe, or claims that Jesus was the smartest man who ever lived, with an IQ of 300... or more.

Jacobsen: [Laughing].

Rosner: I would argue against the possibility of unlimited intelligence, because everything that could be analyzed through intelligence or cognition has its own limits to complexity.

Some systems, like arithmetic, just aren't that complicated in themselves. In theory, if you had unlimited intelligence, an IQ of 1,200 or something, you could go from arithmetic to every other area of math and build the entire edifice of mathematics in a short amount of time.

But in practical terms, you don't go from buying three apples at the grocery store to uncovering the entire structure of mathematics. Or take figuring out what some creepy guy means when he tells a woman in 1952 that she has "nice gams."

It doesn't take that much intelligence to figure out the meaning and then the subtext. Most things aren't that complicated. We're about to enter an era of big data and A.I. analytics. A.I. is going to find patterns and principles that are way beyond what unaugmented humans are able to discern.

In that way, we will have an explosion in intelligence. Is that without limit? And, as you were asking at the beginning of the discussion, is it really meaningful, given that intelligence is contextual? We will have super-powerful analytics in a world propelled by those analytics.

The analytics themselves will find natural complexity beyond anything we have ever seen before. They will also be contending with their own complexity. The whole world of computation and analytics will become, not alienated, but so far removed from humanity, at least at the start, that you can't apply human concepts to it.

But at some point, things will become so far... I've talked myself into a bunch of corners. The only tool we have for assigning a number to human intelligence is IQ, and it is really sucky. Consider computers: we don't call computers smart. We call them powerful.

We have all these numerical indices for measuring and comparing the power and speed of computers. In the future, IQ will go away. As we better understand thought and how it relates to computation, we will come up with a bunch of increasingly descriptive and accurate numerical indices for the power of cognition.

IQ will be made obsolete. Future entities will score higher and higher on those future indices, so, in that way, there may be no upper limit to intelligence. At the very craziest levels, though, there will be practical hurdles to overcome, if not outright limits.

We have talked about the Dyson Sphere, which is when an entity becomes so powerful and energy-hungry that it constructs a sphere around its star to capture the star's energy. The sphere would be a giant cognitive entity or set of entities.

It won't be a computer, because computers aren't as powerful as cognitive entities, which will use technology in more powerful ways than computers do. But it would be like a giant computer, if you believe something like a Dyson Sphere will actually be constructed.

A Dyson Sphere may not be the best structure to build, because it would be tough to manage a sphere with the radius of Earth's orbit: not the radius of Earth, but the distance from the Sun to the Earth. In that scenario, a super-powerful civilization takes all the planets and asteroids that it is not using, deconstructs them, and reconstructs them into this giant sphere.

It may not be the structure that ends up being built. Other huge, solar-system-sized structures may be built to expand civilizations' computational abilities, or civilizations may try to move near or into the black-ish hole at the center of a galaxy.

The scale of space may be smaller there, so you may be able to do more calculations faster. One governor on calculation is the speed of light, or the speed of electrical signals within a computer. That's a speed limit.

Unless you can mess with the scale of space. Whether we have the technology to do something like that will, at various times in our future, effectively limit how much computational power you can have.

It is similar to the limits we're running into now as Moore's Law comes to an end. Moore's Law says that certain forms of computational power and capacity double every 18 months to 2 years. It has been coming to an end for the past few years because circuits are being miniaturized down to near-atomic scales. Physical impossibilities are kicking in.

You can't just keep making stuff smaller and smaller in the same circuitry schemes. You need to invent more sophisticated strategies to increase computational power.

Authors[1]

Rick Rosner

American Television Writer

(Updated July 25, 2019)

*High range testing (HRT) should be taken with honest skepticism grounded in the limited empirical development of the field at present, even in spite of honest and sincere efforts. The higher the general intelligence score, the greater the variability in, and margin of error in, that score, because such scores are rarer in the population.*

According to some semi-reputable sources gathered in a listing, Rick G. Rosner may have one of America's, North America's, and the world's highest measured IQs, at or above 190 (S.D. 15)/196 (S.D. 16), based on his performances on several high range tests created by Christopher Harding, Jason Betts, Paul Cooijmans, and Ronald Hoeflin. He earned 12 years of college credit in less than a year and graduated with the equivalent of 8 majors. He has received 8 Writers Guild Award and Emmy nominations, and was titled 2013 North American Genius of the Year by The World Genius Directory.

He has written for Remote Control, Crank Yankers, The Man Show, The Emmys, The Grammys, and Jimmy Kimmel Live!. He worked as a bouncer, a nude art model, a roller-skating waiter, and a stripper. In a television commercial, Domino's Pizza named him the "World's Smartest Man." The commercial was taken off the air after Subway sandwiches issued a cease-and-desist. He was named "Best Bouncer" in the Denver area, Colorado, by Westword magazine.

Rosner spent much of the late Disco Era as an undercover high school student. In addition, he spent 25 years as a bar bouncer and American fake ID-catcher, and 25+ years as a stripper, and nearly 30 years as a writer for more than 2,500 hours of network television. Errol Morris featured Rosner in the interview series entitled First Person, where some of this history was covered by Morris. He came in second, or lost, on Jeopardy!, sued Who Wants to Be a Millionaire? over a flawed question and lost the lawsuit. He won one game and lost one game on Are You Smarter Than a Drunk Person? (He was drunk). Finally, he spent 37+ years working on a time-invariant variation of the Big Bang Theory.

Currently, Rosner sits tweeting in a bathrobe (winter) or a towel (summer). He lives in Los Angeles, California with his wife, dog, and goldfish. He and his wife have a daughter. You can send him money or questions at LanceVersusRick@Gmail.Com, or a direct message via Twitter, or find him on LinkedIn, or see him on YouTube.

Scott Douglas Jacobsen

Editor-in-Chief, In-Sight Publishing

Scott.D.Jacobsen@Gmail.Com

(Updated January 1, 2020)

Scott Douglas Jacobsen founded In-Sight: Independent Interview-Based Journal and In-Sight Publishing. He authored/co-authored some e-books, free or low-cost. If you want to contact Scott: Scott.D.Jacobsen@Gmail.com.

Endnotes

[1] Four format points for the session article:

- Bold text following “Scott Douglas Jacobsen:” or “Jacobsen:” is Scott Douglas Jacobsen & non-bold text following “Rick Rosner:” or “Rosner:” is Rick Rosner.

- Session article conducted, transcribed, edited, formatted, and published by Scott.

- Footnotes & in-text citations in the interview & references after the interview.

- This session article has been edited for clarity and readability.

For further information on the formatting guidelines incorporated into this document, please see the following documents:

- American Psychological Association. (2010). Citation Guide: APA. Retrieved from http://www.lib.sfu.ca/system/files/28281/APA6CitationGuideSFUv3.pdf.

- Humble, A. (n.d.). Guide to Transcribing. Retrieved from http://www.msvu.ca/site/media/msvu/Transcription%20Guide.pdf.

License and Copyright

License

In-Sight Publishing by Scott Douglas Jacobsen is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Based on a work at www.in-sightjournal.com and www.rickrosner.org.

Copyright

© Scott Douglas Jacobsen, Rick Rosner, and In-Sight Publishing 2012-2020. Unauthorized use and/or duplication of this material without express and written permission from this site’s author and/or owner is strictly prohibited. Excerpts and links may be used, provided that full and clear credit is given to Scott Douglas Jacobsen, Rick Rosner, and In-Sight Publishing with appropriate and specific direction to the original content.
